Kill the Cover Letter and Résumé

By Jesse Singal

No job applicant has ever enjoyed the process of assembling a decent cover letter and résumé. There’s the endless scanning for errors and out-of-date information, the pointless attempts to make your materials stand out (are you “well-qualified” for the job, or just “qualified”? “Enthusiastic” or “very enthusiastic”?), and the overall soul-crushing feeling that comes from knowing your application will likely end up in a pile of hundreds of others that look just about identical to it. Employers aren’t fans, either — anyone who has waded through a stack of the things understands how exhausting it is to try to sift fact from fiction, qualification from “qualification.” Despite all of this, the system has been entrenched for a while now. It’s just how it’s done.

Now, though, researchers have finally built up enough solid science about human decision-making to confirm a belief held by many on both sides of the hiring equation: It’s time for the résumé and the cover letter to die.

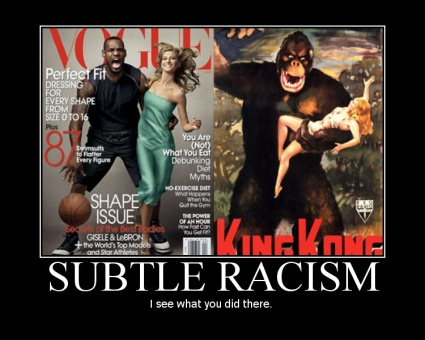

The problem is that the résumé-and-cover-letter bundle — call it “the packet” from here on — is an inefficient, time-wasting way for employers to sort through a first wave of applicants. It doesn’t provide nearly as much useful information about potential employees as we’ve been led to believe, meaning that firms that overly rely on it are likely missing out on talented applicants whose materials get overlooked. What’s worse is that it’s discriminatory — it exacerbates many of the biases that fuel a winner-take-all job market at the expense of minorities and people without fancy connections.

The latter claim might sound odd, given that the information contained in these packets seems pretty straightforward and objective — name, alma mater, things like that. But psychologists and sociologists have shown convincingly that biases powerfully affect our ostensibly “objective” perceptions of others, and that the packet contains exactly the sorts of information that can trigger these biases.

This is far from a bleeding-heart position: There’s a mountain of evidence, and it’s growing seemingly by the week. If you send out identical résumés with white-sounding and black-sounding names, the white-sounding ones will receive more invitations for interviews. You have better odds of being seen as likable and capable if you have a “normal”-sounding name. If you ask subjects of an experiment to proofread a writing sample, they’ll find more errors if told the author is black. If you pose as a student and send a professor an email looking for more advice, they’re less likely to help you out if you have a name that isn’t white- and male-sounding. And if someone reviewing your job application sees that you’re a mother, you’ll often be penalized for it (less likely if you’re a father, however).

So the packet is a minefield of information that could trigger bias, from the basic demographic information revealed by a résumé to the endless name-drops of impressive achievements and associations on a cover letter. “Why does a company need to know your name or where you live?” asked Nathanial Peterson, a Ph.D. student who studies biased decision-making at Carnegie Mellon, in an email. “This invites race, ethnicity, and gender bias that is known to be prevalent in hiring decisions.” Christopher J. Collins, a human resources management professor at Cornell, agreed. “I think people list things on there that potentially give lots of insight into race, gender, things that firms are not supposed to use to make hiring decisions,” he said.

Katherine Milkman, the Wharton professor who ran the emailing-professors study, points out just how widespread this issue is. “Our work shows that it’s still a major problem, even in highly educated populations where you might not expect to see it, and that it is the case across a very wide swath of different groups,” she said. “So we discriminate against women and ethnic minorities who are black, Hispanic, Chinese, and Indian. It’s not like just one group is being harmed — everyone is being harmed here relative to Caucasian males. And obviously if we care about hiring the best people, promoting the best people ... then it’s problematic if we’re allowing ourselves to be influenced in any way by race or gender.”

This extends well past questions of race and gender, though. Valerie Purdie-Vaughns, a psychology researcher at Columbia who specializes in intergroup and diversity issues, explained that once we see what school someone went to, for example, it greatly affects how we subsequently evaluate their work. The Harvard applicant and the State U - Satellite Campus applicant are graded on different curves, in other words, even if they’re equally capable.

Take evaluations of writing samples. “Once you assume that someone’s smart, a whole other set of things goes on,” said Purdie-Vaughns. “One of the things that happens is that you tend to evaluate whatever they wrote in terms of looking for positives instead of looking for negatives. So the person who went to the small state school, you’re looking for mistakes, you’re looking for typos, you’re looking for when they are saying something that doesn’t make any sense, and that confirms your bias that they’re not what you’re looking for. The Harvard applicant, you’re looking for the positive: all of the parts of their cover letter that are coming together for you, and you actually tend to avoid looking at things like the typos or the mistakes. And when you do see things that are either mistakes or errors or red flags, you interpret them differently. You interpret them as, ‘Oh, this person must have been really tired putting together this cover letter because they work so hard.’ Or, ‘Oh, they must be really busy.’” In evaluating the sample of the applicant who went to the small state school, on the other hand, “you tend to interpret mistakes as diagnostic of their ability.”

Purdie-Vaughns is referring to confirmation bias, a well-documented phenomenon in which people tend to seek out evidence confirming what they already know (Harvard grads are smart; State grads? Not so much) while ignoring evidence to the contrary. Falling into this trap doesn’t make a hiring manager a bad person, or classist, or anything else — it just makes them human.

That’s the problem: perfectly good, open-minded, non-discriminatory people can end up making these errors. And the context in which hiring decisions are made (long days spent amid towering piles of application packets) only makes it easier for bias to creep in. “Most of the time people making hiring decisions, we’re tired!” said Purdie-Vaughns. “The more tired you are, that exacerbates each of these biases.”

But even for firms unconcerned with promoting diversity, there’s another good reason to do away with the packet: many researchers think it’s not a very good predictor of what skills an applicant has.

“The broader issue in this kind of biased decision-making is people overinterpret the résumés,” said Collins. “Let’s say you list that you’re a reporter and have done a certain number of stories. I might read into that skills you have that maybe you have, and maybe you don’t.” So “people may get selected in or out [of a potential job] by a biased interpretation of that experience.” Frank L. Schmidt, a University of Iowa professor emeritus who is a leading researcher of prospective-employee evaluation methods, echoed this view. “Even when résumés are honest, an emphasis on résumés often leads employers to focus on credentials per se, which have extremely low validity for predicting future job performance,” he said in an email. The same logic applies for cover letters, of course, since they are little more than opportunities for applicants to stack credential atop credential.

So if résumés and cover letters are so bad, how should companies evaluate applicants? There are a few different options.

At the let’s-just-tweak-it end of the spectrum, employers could choose to rely on the same information currently communicated via the packet, but to do so in an anonymized way. There’s a famous study based on this idea that comes from a world far removed from the paperwork and fluorescent lights of most workplaces: symphony orchestras. In a paper published in 2000, Claudia Goldin of Harvard and Cecilia Rouse of Princeton examined a bunch of data from orchestras that adopted “blind” auditions in which screens concealed the identity of the performers. The screens were introduced in part to chip away at the boys’ club that had developed as a result of the old way of doing business, in which members of orchestras were “largely handpicked by the music director,” as the researchers wrote, and mostly drawn from a pool of “(male) students of a select group of teachers.”

Goldin and Rouse’s number-crunching found that adding the screen did, in fact, account for a good chunk of the then-recent increase in female orchestra members (another chunk was explained simply by the fact that more were applying), meaning that without the screen these women wouldn’t have gotten their jobs simply by virtue of their gender.

A screen’s not going to work for hiring a new manager of operations, of course. But the overall lesson of the orchestra study could easily be applied to hiring decisions: Firms could institute partial “screens” by obscuring certain information from résumés. “One could have information converted into a less potentially biasing format — ‘above average school’ rather than the specific school,” said Kuncel. Katherine Phillips, a Columbia University professor, had a similar idea: instruct applicants to submit résumés with only “initials or a numbered code,” which “would essentially remove gender and race information,” she said in an email.

But for jobs in fields like academia, journalism, or coding that require concrete outputs, there’s a case to be made to go even further: have the first step of the application process be a full-blown sample work assignment, submitted anonymously. Either you can do the work, or you can’t — your potentially biasing race and gender and educational characteristics aren’t even revealed until at least the second round, if you make it that far. (Some companies, particularly those in the tech world, are starting to move in this direction.)

“That would be a much better predictor of your actual ability to write a story or do an investigation,” said Collins. “You’d be testing for the actual job skills that would be most important for success.” Purdie-Vaughns said the advantage of this sort of system would be that it doesn’t completely disregard information like what school someone went to, which “is probably like 5 percent or 8 percent important, [but] not 80 percent important,” as she put it, but does put it in the proper place. And ideally, such a form of hiring could lead to a more diverse applicant pool once applicants realized they’d be judged on the quality of their work rather than on the fanciness of their credentials. (The common knock on this idea is that it would create more HR work for firms, but HR departments are already inundated by packets from unqualified applicants under the current system, and a more in-depth first step could cull the field in a useful way.)

Finally, there are tests that employers can have applicants take online to gauge who will be a good fit for a position. Researchers have found that high scores on (methodologically sound) tests of intelligence and of conscientiousness tend to accurately predict who will be a good worker, regardless of the field. Kuncel explained in an email that there is a plethora of such tests firms can use: “knowledge tests, cognitive ability tests, measures of personality, vocational interest measures, [and] integrity tests,” among others. Organizational researchers have produced a mountain of literature attempting to figure out which tests are most valid for which position, and the answer can vary hugely from job to job. Hubert Feild, an Auburn management professor and co-author of the textbook Human Resource Selection, mentioned a self-administered personality inventory Texas Instruments has used in the past on its employment website. “That was their way of screening out people who were not team oriented, not a team player,” he said. “So they were able to screen people out without bothering with a résumé.” Whatever sort of test or tests a given firm decides to administer, what’s crucial is that it can be done anonymously so as to curtail all the various aforementioned forms of bias. (Yes, these tests could hypothetically be taken by an applicant’s smarter friend, but firms will usually retest an applicant in a proctored manner right before extending a job offer as a check against this.)

It's clear that companies serious about stripping bias from their hiring processes have no shortage of options. What's less clear is exactly how human judgement should factor into the process, particularly when it comes to the tricky question of evaluating how easy a candidate will be to interact with on a daily basis. “People talk a lot about cultural fit, and ‘cultural fit’ can certainly be a euphemism for hiring people who are like ourselves,” said James Baron, a professor at the Yale School of Management, “but to the extent that people work interdependently, that kind of information can be really helpful as to the likelihood of the person fitting on the team.” Here, too, there are ways to reduce bias. So-called unstructured interviews in which there's no set script of questions are, despite their ubiquity, seen by researchers as a terrible way to make personnel decisions. Structured interviews, on the other hand, can be useful when conducted in a rigorous manner.

So should structured interviews always be a part of the process, then? For less team-oriented jobs, is it okay to sometimes hire blind, without even meeting the candidate in person? Where, in short, should the line lie between assessment measures that can be delivered in cold numerical fashion by a computer, and those that require human evaluation, warts and all? “The question is what the cost benefit is between getting rid of implicit bias and information that has real signal value,” said Milkman. “Academics have not answered that question.”
